Intro

This is a post about one of my favorite projects: a deep learning recommendation system that learns from both user behavior and item context. The main selling points of this system are:

- It’s a single system that learns from both user behavior and item context, so it does well on both accuracy and recall, and it is robust to cold start because a new item can still be embedded from its context.

- To score millions of item × user combinations, we designed a special architecture that makes online prediction super cheap by leveraging ANN (approximate nearest neighbor) search.

Here’s the slide:

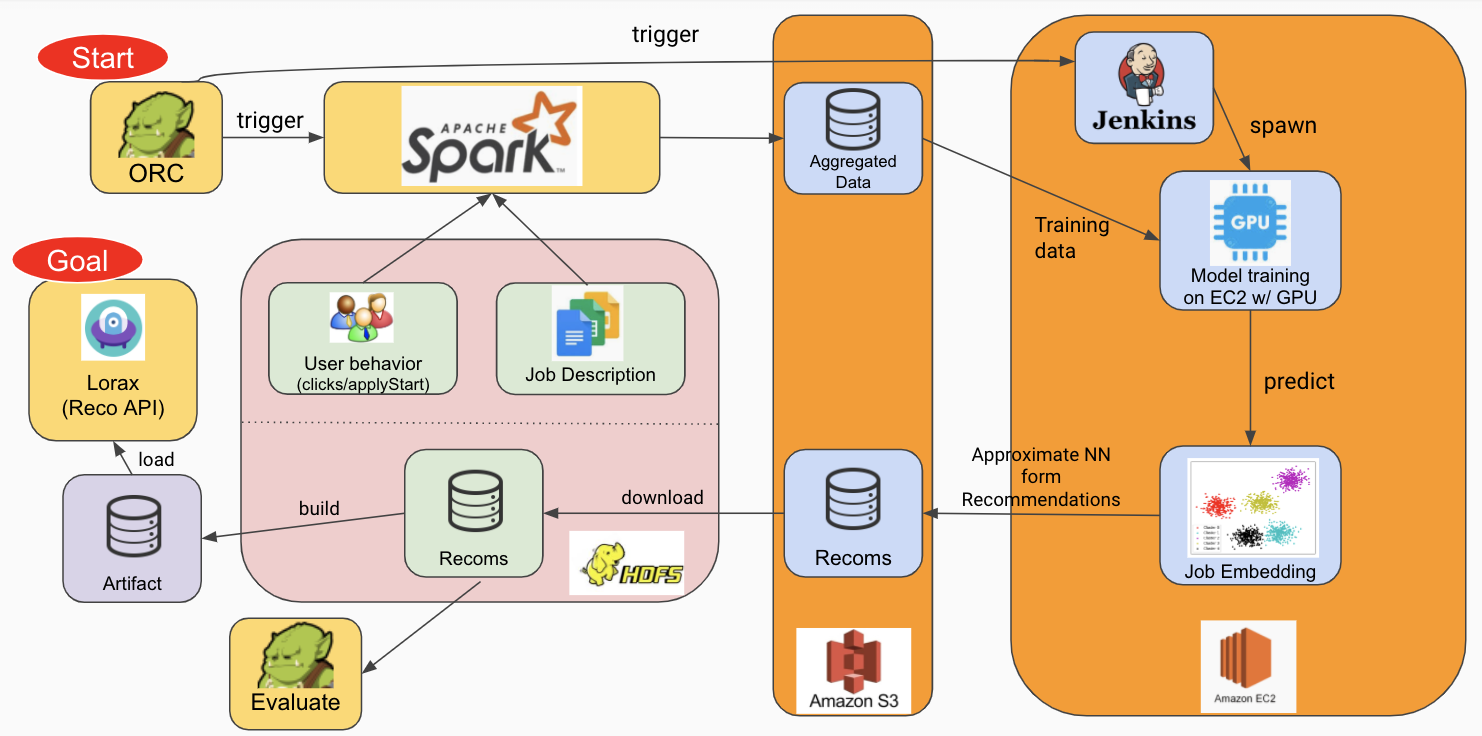

Architecture

The whole architecture looks like this. It looks fancy, and it took me quite a lot of effort to struggle through a whole bunch of infra troubles, LOL.

Key concept

- Learn user behavior through a graph model: [item2vec with global context][ref1], from the 2018 best paper by Airbnb.

- Extend this template, which converts user sessions into a binary classification problem, and use a siamese network to learn from item context.

- In production, to predict on the fly, we use cached item embeddings and user embeddings computed offline. This greatly reduces the computational cost, so the neural network's results can be served online for a product at the scale of millions of users × millions of items.

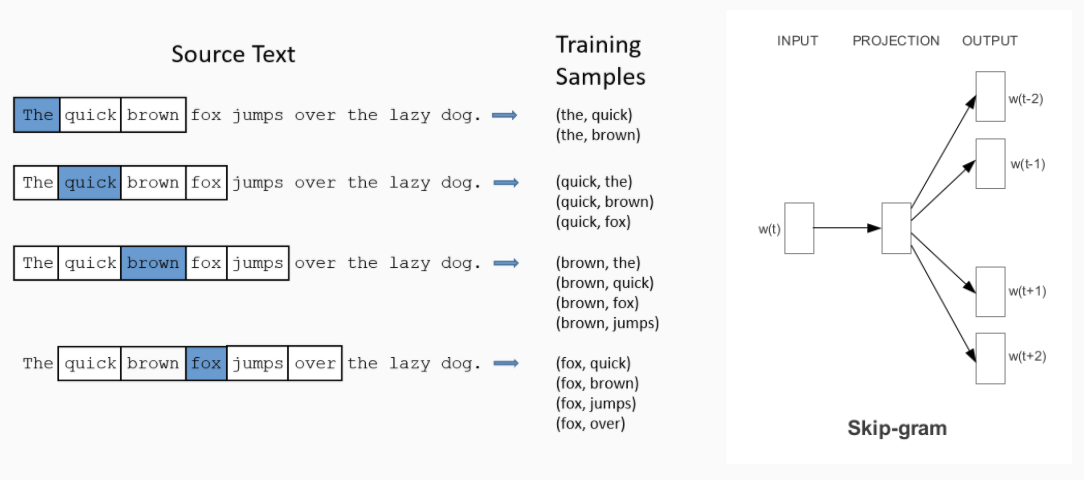
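To make the serving idea concrete, here is a minimal sketch of online prediction from cached embeddings. It assumes the relevance score is a plain inner product between a user embedding and an item embedding, and it uses exact brute-force search; in a real system an ANN index would stand in for the `top_k_items` step. The names `top_k_items`, `item_embeddings` and `user_embeddings`, as well as the toy vectors, are made up for illustration.

```python
import heapq
from typing import Dict, List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product, used here as the user-item relevance score."""
    return sum(a * b for a, b in zip(u, v))


def top_k_items(
    user_vec: Sequence[float],
    item_embeddings: Dict[str, Sequence[float]],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Exact top-k by inner product; an ANN index would approximate this step."""
    scored = (
        (dot(user_vec, vec), item_id) for item_id, vec in item_embeddings.items()
    )
    return [(item_id, score) for score, item_id in heapq.nlargest(k, scored)]


# Toy cached embeddings (made-up values standing in for the offline output).
item_embeddings = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
user_embeddings = {"u1": [0.9, 0.1]}

recommended = top_k_items(user_embeddings["u1"], item_embeddings, k=2)
print([item_id for item_id, _ in recommended])  # item "a" ranks first, then "c"
```

The point is that no neural network runs at request time: the expensive encoders run offline, and the online path is only a lookup plus a nearest-neighbor query.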

Item2Vec

This model actually comes from word2vec: just treat the user session as a sentence and each visited item as a word token, then you can apply the skip-gram model just like word2vec.

In short, item2vec helped us turn the recommendation problem into a binary classification problem. The training process is really a way to learn the item embeddings and define the “distance” between items.

Airbnb improved this model by introducing the idea of “global context”, which enables us to weight click events and payment events differently, since these two kinds of user behavior signal very different levels of interest in the item.

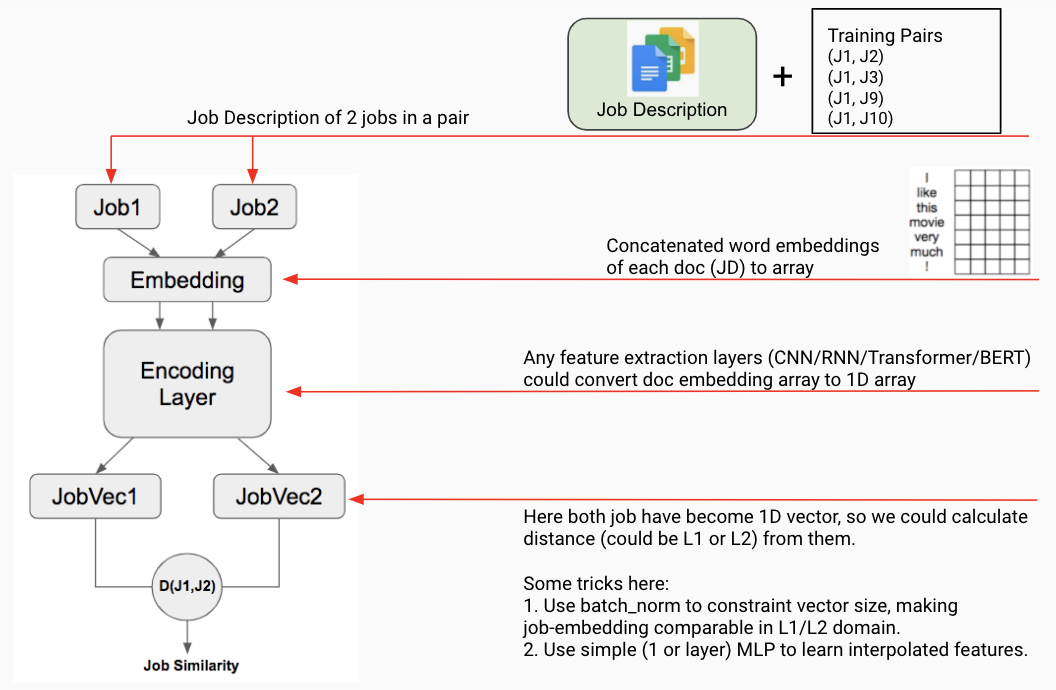
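Here is a rough sketch of how a session could be turned into binary classification examples. It assumes a sliding-window skip-gram with random negative sampling, and it models the global context by pairing the purchased item with every item in the session, not just its window neighbors. The function name `session_to_pairs`, its parameters, and the toy item ids are all hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Pair = Tuple[str, str, int]  # (center item, context item, label)


def session_to_pairs(
    session: Sequence[str],
    purchased: Optional[str] = None,
    window: int = 1,
    vocab: Sequence[str] = (),
    num_neg: int = 1,
    seed: int = 0,
) -> List[Pair]:
    """Turn one user session into labeled (center, context) pairs."""
    rng = random.Random(seed)
    # Negatives are drawn from items the user did not touch in this session.
    candidates = [v for v in vocab if v not in session and v != purchased]
    pairs: List[Pair] = []
    for i, center in enumerate(session):
        lo, hi = max(0, i - window), min(len(session), i + window + 1)
        context = [session[j] for j in range(lo, hi) if j != i]
        # Global context: the purchased item is a positive for every center item.
        if purchased is not None and purchased != center and purchased not in context:
            context.append(purchased)
        pairs.extend((center, ctx, 1) for ctx in context)
        for neg in rng.sample(candidates, min(num_neg, len(candidates))):
            pairs.append((center, neg, 0))
    return pairs


pairs = session_to_pairs(
    session=["a", "b", "c", "d"],
    purchased="d",
    window=1,
    vocab=["a", "b", "c", "d", "x", "y"],
)
for pair in pairs:
    print(pair)
```

With a window of 1, item "a" would normally only see "b", but the pair ("a", "d", 1) still shows up because "d" was the purchase. Each pair then becomes one training example for the binary classifier.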

Siamese Network

Finally, with the help of the item2vec formulation, we can swap the binary classifier for a siamese network, so we can also incorporate item context into the model. That’s how we learn from both user behavior and item context in one model, end to end.

Here I use a CNN as the encoder, since it’s fast and performs well. Feel free to switch the encoder to anything fancier if you have the budget for the extra computing power :p
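To show what "siamese" means here, below is a dependency-free sketch of the forward pass only. It assumes the item context is a sequence of token ids (for example, title tokens), that the encoder is a single 1-D convolution with max-over-time pooling, and that the two encoded items are compared with an inner product followed by a sigmoid. The class `TinyConvEncoder`, the function `siamese_score`, and all sizes are made up; the weights are random and untrained, and a real model would be built and trained in a deep learning framework.

```python
import math
import random
from typing import List, Sequence


class TinyConvEncoder:
    """Token embedding -> 1-D convolution -> max-over-time pooling."""

    def __init__(self, vocab_size: int, embed_dim: int = 4,
                 num_filters: int = 3, kernel: int = 2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.kernel = kernel
        self.embed_dim = embed_dim
        self.embed = [[rng.uniform(-0.5, 0.5) for _ in range(embed_dim)]
                      for _ in range(vocab_size)]
        self.filters = [[rng.uniform(-0.5, 0.5) for _ in range(kernel * embed_dim)]
                        for _ in range(num_filters)]

    def encode(self, token_ids: Sequence[int]) -> List[float]:
        rows = [self.embed[t] for t in token_ids]
        # Zero-pad short inputs so at least one convolution window exists.
        rows += [[0.0] * self.embed_dim] * max(0, self.kernel - len(rows))
        # Each window is `kernel` consecutive token vectors, flattened.
        windows = [
            [x for row in rows[i:i + self.kernel] for x in row]
            for i in range(len(rows) - self.kernel + 1)
        ]
        # One output per filter: the strongest response over all positions.
        return [max(sum(w * x for w, x in zip(f, win)) for win in windows)
                for f in self.filters]


def siamese_score(encoder: TinyConvEncoder,
                  item_a: Sequence[int], item_b: Sequence[int]) -> float:
    """Both items go through the SAME encoder (shared weights)."""
    va, vb = encoder.encode(item_a), encoder.encode(item_b)
    logit = sum(x * y for x, y in zip(va, vb))
    return 1.0 / (1.0 + math.exp(-logit))  # probability that the pair is a positive


encoder = TinyConvEncoder(vocab_size=10)
score = siamese_score(encoder, [1, 2, 3], [2, 3, 4])
print(round(score, 3))
```

Training would push this score toward 1 for pairs that co-occur in a session and toward 0 for sampled negatives. Because both towers share one encoder, the same encoder can be run offline over every item to produce the cached embeddings used at serving time.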